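Short videos, online education, and self-published content are growing explosively, and more and more creators rely on automatic subtitle tools to make their content more readable and easier to distribute. But do you actually know which AI generates these subtitles? How accurate and how smart are they? And what technology sits behind them?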

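As a content creator who has used many subtitle tools, I draw on my own testing in this article to explain subtitle-generation AI: its underlying principles, core models, use cases, strengths, and weaknesses. If you want more professional, accurate subtitles with multilingual output, this article offers a comprehensive and practical answer.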

What Is Subtitle AI?

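As digital video develops rapidly, subtitle creation no longer depends on tedious manual typing. Mainstream subtitle production has entered an AI-driven stage. So what exactly is subtitle AI? Which technologies does it use, and what are its main types?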

Subtitle-generation AI usually refers to a system built on two core technologies:

- ASR (Automatic Speech Recognition): accurately transcribes the speech in video and audio into text.

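- NLP (Natural Language Processing): splits sentences, adds punctuation, and polishes the wording so the subtitles are more readable and semantically complete.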

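Combining the two, AI can automatically recognize speech → generate the subtitle text → align it precisely to timecodes. This makes it possible to produce standard subtitle files (such as .srt and .vtt) efficiently, without manual transcription.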

This is the kind of subtitle AI technology now in wide use on global platforms such as YouTube, Netflix, Coursera, and TikTok.

Three Main Types of Subtitle AI

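| Type | Representative tools/technologies | Description |
|---|---|---|
| 1. Recognition AI | OpenAI Whisper, Google Cloud Speech-to-Text | Focuses on speech-to-text transcription; high accuracy, multilingual support |
| 2. Translation AI | DeepL, Google Translate, Meta NLLB | Translates subtitles into multiple languages; relies on contextual understanding |
| 3. Generation + editing AI | Easysub (multi-model approach) | Combines recognition, translation, and time alignment with editable output; well suited to content creators |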

How Does Subtitle AI Work?

Step 1: Speech Recognition (ASR, Automatic Speech Recognition)

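This is the first and most essential step of subtitle generation. The AI system takes speech input from the video or audio, analyzes it with a deep-learning model, and recognizes the words in each sentence. Mainstream technologies such as OpenAI Whisper and Google Speech-to-Text are trained on large-scale multilingual speech data.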
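To make this step concrete, here is a minimal Python sketch, assuming the open-source `openai-whisper` package, whose `transcribe()` returns a dict with a `segments` list in which each entry has `start` and `end` (in seconds) and `text`. The `Segment` class and `segments_from_whisper` helper are hypothetical names for illustration, and the demo uses a hand-built result of the same shape so it runs without downloading a model.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float  # seconds
    end: float    # seconds
    text: str


def segments_from_whisper(result: dict) -> list[Segment]:
    """Hypothetical helper: turn a Whisper-style result dict into Segments.

    Assumes the shape returned by openai-whisper's transcribe():
    {"text": ..., "segments": [{"start": ..., "end": ..., "text": ...}, ...]}
    """
    segments = []
    for s in result.get("segments", []):
        text = s["text"].strip()  # Whisper segment text usually has a leading space
        if text:  # skip empty segments
            segments.append(Segment(float(s["start"]), float(s["end"]), text))
    return segments


# Real usage (requires `pip install openai-whisper` plus ffmpeg; not run here):
#   import whisper
#   model = whisper.load_model("base")
#   result = model.transcribe("video.mp4")

# Hand-built result in the same shape, so the sketch runs without a model.
result = {
    "text": " Hello everyone. Welcome back.",
    "segments": [
        {"start": 0.0, "end": 1.8, "text": " Hello everyone."},
        {"start": 1.8, "end": 3.2, "text": " Welcome back."},
    ],
}
for seg in segments_from_whisper(result):
    print(f"{seg.start:.1f}s-{seg.end:.1f}s: {seg.text}")
```

Keeping the start and end times from this first step matters: every later step (line splitting, alignment, export) builds on them.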

Step 2: Natural Language Processing (NLP)

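AI can recognize the words, but the raw output is often "machine language": no punctuation, no sentence boundaries, and poor readability. The NLP module's job is to apply linguistic logic to the recognized text, including: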

- Adding punctuation (periods, commas, question marks, etc.)

- Splitting speech into natural units (each subtitle a reasonable, easy-to-read length)

- Correcting grammatical errors to improve fluency

This step usually combines corpora with context-aware semantic modeling, so the subtitles read more like sentences written by a person.
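Punctuation restoration itself needs a trained model, but the splitting part can be illustrated with plain rules. The sketch below assumes the transcript already has punctuation (Whisper output often does) and uses a 42-character line limit, a common guideline rather than a fixed standard. `split_into_cues` is a hypothetical helper, not any tool's actual implementation.

```python
import re
import textwrap

# Assumed per-line limit; many style guides use roughly 37-42 characters
# for Latin-script subtitles. Tune it per language and platform.
MAX_CHARS = 42

# Split after . ! ? when followed by whitespace (so "3.5" stays intact),
# or directly after the CJK marks 。！？, which usually have no space after them.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+|(?<=[。！？])")


def split_into_cues(text: str, max_chars: int = MAX_CHARS) -> list[str]:
    """Hypothetical helper: split punctuated transcript text into
    subtitle-sized chunks, first at sentence ends, then by length."""
    sentences = [s.strip() for s in _SENTENCE_END.split(text) if s.strip()]
    chunks: list[str] = []
    for sentence in sentences:
        if len(sentence) <= max_chars:
            chunks.append(sentence)
        else:
            # textwrap breaks at whitespace; unspaced CJK text would need a
            # character- or tokenizer-based splitter instead.
            chunks.extend(textwrap.wrap(sentence, width=max_chars))
    return chunks


transcript = ("Welcome back to the channel. Today we are going to look at how "
              "automatic subtitle tools turn speech into readable text! 你好。再見。")
for chunk in split_into_cues(transcript):
    print(chunk)
```

Splitting at sentence ends first, and only then by length, keeps each subtitle aligned with a complete thought whenever the sentence is short enough.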

Step 3: Timecode Alignment

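Subtitles are not just text; they must also be precisely synchronized with the video. In this step, the AI analyzes when each stretch of speech starts and ends and generates timeline data (start/end timecodes) for each subtitle, so that the text stays in sync with the audio.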
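Many ASR systems can also return per-word timestamps (in openai-whisper, passing `word_timestamps=True` to `transcribe()` adds a `words` list to each segment). Given such timestamps, one simple way to build subtitle timing is to group consecutive words into cues, starting a new cue when a line would get too long or when there is a noticeable pause. The sketch below uses hypothetical `Word`/`Cue` classes and assumed thresholds (42 characters, 0.6 s pause); production tools may use forced alignment or more elaborate rules.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


@dataclass
class Cue:
    start: float
    end: float
    text: str


def _make_cue(words: list[Word]) -> Cue:
    # A cue runs from its first word's start to its last word's end.
    return Cue(words[0].start, words[-1].end, " ".join(w.text for w in words))


def group_words(words: list[Word], max_chars: int = 42,
                max_gap: float = 0.6) -> list[Cue]:
    """Hypothetical helper: group word timestamps into subtitle cues.

    A new cue starts when adding the next word would push the text past
    max_chars, or when the pause before that word exceeds max_gap seconds.
    """
    cues: list[Cue] = []
    current: list[Word] = []
    for word in words:
        if current:
            too_long = len(" ".join(w.text for w in current + [word])) > max_chars
            long_pause = word.start - current[-1].end > max_gap
            if too_long or long_pause:
                cues.append(_make_cue(current))
                current = []
        current.append(word)
    if current:
        cues.append(_make_cue(current))
    return cues


# With Whisper output, each word dict would be mapped to
# Word(w["word"].strip(), w["start"], w["end"]) (an assumed mapping).
words = [Word("Hello", 0.00, 0.40), Word("everyone", 0.45, 1.00),
         Word("welcome", 2.00, 2.50), Word("back", 2.55, 2.90)]
for cue in group_words(words):
    print(f"{cue.start:.2f} --> {cue.end:.2f}  {cue.text}")
```

Here the one-second silence between "everyone" and "welcome" produces two separate cues, each timed to its own words.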

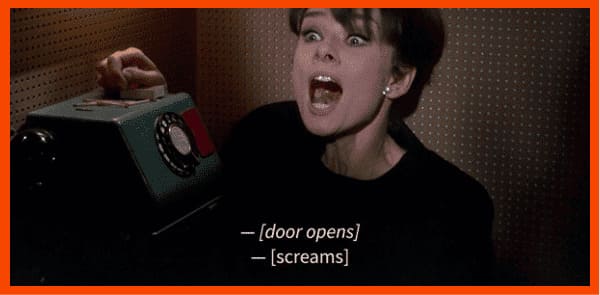

Step 4: Subtitle Format Output (SRT, VTT, ASS, etc.)

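Once the text and timecodes are processed, the system converts the subtitles into standardized formats for export, editing, or uploading to platforms. Common formats include: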

- .srt: the most common subtitle format, supported by most video platforms

- .vtt (WebVTT): used for HTML5 video and supported by web players

- .ass: supports advanced styling (color, font, position, etc.)
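The differences between SRT and WebVTT are small enough to show in code. SRT numbers each cue and writes milliseconds after a comma (`00:00:01,800`), while WebVTT starts with a `WEBVTT` header line and uses a period (`00:00:01.800`). Below is a minimal sketch with hypothetical function names, with cues passed as `(start_seconds, end_seconds, text)` tuples. ASS output is left out because its styled `[Events]` section needs considerably more setup.

```python
def format_timestamp(seconds: float, ms_sep: str) -> str:
    """HH:MM:SS<ms_sep>mmm; SRT uses ',' and WebVTT uses '.'."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}{ms_sep}{ms:03d}"


def to_srt(cues: list[tuple[float, float, str]]) -> str:
    """Numbered cues separated by blank lines."""
    blocks = [
        f"{i}\n{format_timestamp(start, ',')} --> {format_timestamp(end, ',')}\n{text}\n"
        for i, (start, end, text) in enumerate(cues, start=1)
    ]
    return "\n".join(blocks)


def to_vtt(cues: list[tuple[float, float, str]]) -> str:
    """WEBVTT header, then cues separated by blank lines."""
    lines = ["WEBVTT", ""]
    for start, end, text in cues:
        lines += [f"{format_timestamp(start, '.')} --> {format_timestamp(end, '.')}", text, ""]
    return "\n".join(lines)


cues = [(0.0, 1.8, "Hello everyone."), (1.8, 3.25, "Welcome back.")]
print(to_srt(cues))
print(to_vtt(cues))
```

Working in whole milliseconds (`round(seconds * 1000)`) before splitting into hours, minutes, and seconds avoids floating-point artifacts such as `1.7999` showing up in the output.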

💡 Easysub supports export in multiple formats, meeting the needs of creators on different platforms such as YouTube, Bilibili, and TikTok.

Mainstream Subtitle AI Models

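As automatic subtitle technology keeps evolving, the AI models behind it are iterating quickly. From speech recognition to language understanding, translation, and structured output, major tech companies and AI labs have built several highly mature models.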

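For content creators, knowing these mainstream models helps you judge the technical strength behind a subtitle tool and choose the platform that best fits your needs (such as Easysub).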

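| Model/Tool | Organization | Core function | Application |
|---|---|---|---|
| Whisper | OpenAI | Multilingual automatic speech recognition | Open-source, high-accuracy multilingual subtitle recognition |
| Google STT | Google Cloud | Speech-to-text API | Stable cloud API for enterprise-grade subtitle systems |
| Meta NLLB | Meta AI | Neural machine translation | Supports 200+ languages; suited to subtitle translation |
| DeepL Translator | DeepL GmbH | High-quality machine translation | Natural, accurate translation for professional subtitles |
| Easysub AI pipeline | Easysub | End-to-end subtitle AI | Integrates ASR + NLP + timecodes + translation + editing |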

Challenges of Automatic Subtitle AI and How They Are Addressed

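Although automatic subtitle generation has made remarkable progress, it still faces many technical challenges and limitations in real-world use. With multiple languages, complex content, diverse accents, or noisy video in particular, the AI's ability to "hear, understand, and write" is not always perfect.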

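As a content creator who uses subtitle AI tools in practice, I have summarized some typical problems I ran into, and looked at how tools and platforms, including Easysub, address them.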

Challenge 1: Accents, dialects, and unclear speech reduce recognition accuracy

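Even with the most advanced speech recognition models, subtitles can be misrecognized because of non-standard pronunciation, mixed dialects, or background noise. Common symptoms include: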

- English videos with Indian, Southeast Asian, or African accents may be misrecognized.

- In Chinese videos, passages in Cantonese, Taiwanese Hokkien, or Sichuanese may be missing from the transcript.

- In noisy settings (outdoors, meetings, livestreams), the AI cannot reliably separate voices from the background.

Easysub's solution:

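Easysub uses a multi-model fusion recognition algorithm (including Whisper and in-house models), combining language detection, background noise reduction, and context compensation to improve recognition accuracy.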
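Claims about recognition accuracy are usually quantified with word error rate (WER): the number of substituted, deleted, and inserted words needed to turn the ASR output into a reference transcript, divided by the number of words in the reference. For Chinese, which is written without spaces between words, character error rate (CER) is typically used instead. Below is a minimal sketch of both using a standard Levenshtein (edit distance) table. The function names are illustrative.

```python
def edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Minimum substitutions + deletions + insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances for an empty ref prefix
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,          # deletion
                          curr[j - 1] + 1,      # insertion
                          prev[j - 1] + cost)   # substitution or match
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate for space-separated languages such as English."""
    ref = reference.split()
    return edit_distance(ref, hypothesis.split()) / max(len(ref), 1)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate, ignoring whitespace; common for Chinese."""
    ref = [c for c in reference if not c.isspace()]
    hyp = [c for c in hypothesis if not c.isspace()]
    return edit_distance(ref, hyp) / max(len(ref), 1)


print(round(wer("the cat sat on the mat", "the cat sit on mat"), 3))  # 0.333
print(round(cer("今天天氣很好", "今天天汽很好"), 3))  # 0.167
```

In the first example, "sat" → "sit" is one substitution and the missing "the" is one deletion, so WER = 2/6.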

Challenge 2: Complex sentence structure and poor sentence breaks make subtitles hard to read

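When AI-transcribed text lacks punctuation and structural cleanup, it often runs together as one unbroken block, or sentences are cut off mid-thought, which seriously hurts audience comprehension.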

Easysub's solution:

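Easysub has a built-in NLP (natural language processing) module that uses pretrained language models to apply smart sentence segmentation, punctuation, and semantic smoothing to the raw transcript, producing subtitle text that matches how people actually read.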
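Whatever segmentation method a tool uses, the result can be checked against simple readability limits: characters per line, lines per cue, and reading speed in characters per second (CPS). The thresholds below (42 characters, 2 lines, 17 CPS) are assumptions loosely based on common subtitling guidelines and should be tuned per language and audience. `readability_issues` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str     # may contain "\n" between subtitle lines


def readability_issues(cue: Cue, max_line_chars: int = 42,
                       max_lines: int = 2, max_cps: float = 17.0) -> list[str]:
    """Hypothetical helper: list readability problems (empty list if none).

    Thresholds are assumed defaults, not a formal standard.
    """
    issues: list[str] = []
    lines = cue.text.split("\n")
    if len(lines) > max_lines:
        issues.append(f"{len(lines)} lines > {max_lines}")
    for line in lines:
        if len(line) > max_line_chars:
            issues.append(f"line of {len(line)} chars > {max_line_chars}")
    duration = cue.end - cue.start
    chars = len(cue.text.replace("\n", ""))
    if duration <= 0:
        issues.append("non-positive duration")
    elif chars / duration > max_cps:
        issues.append(f"reading speed {chars / duration:.1f} cps > {max_cps}")
    return issues


print(readability_issues(Cue(0.0, 3.0, "Hello everyone.")))
print(readability_issues(Cue(0.0, 1.0, "This line is read far too quickly.")))
```

The second cue is short enough to fit on one line, but at 34 characters in one second it is flagged as too fast to read.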

Challenge 3: Insufficient accuracy in multilingual subtitle translation

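When translating subtitles into English, Japanese, Spanish, and other languages, AI often lacks context and produces mechanical, stiff, out-of-context sentences.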

Easysub's solution:

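Easysub integrates a DeepL/NLLB multi-model translation system and lets users proofread translations manually and edit them in a multilingual cross-reference view.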
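One common way to give a translation engine more context is to send each subtitle together with its neighbors while leaving the original timecodes untouched. The sketch below assumes a hypothetical `translate(text, context)` callable that you would wrap around whichever engine you use (DeepL, NLLB, or another). It does not reflect Easysub's actual implementation or any real API signature.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class Cue:
    start: float
    end: float
    text: str


# Hypothetical translator signature: (text_to_translate, surrounding_context) -> translation.
# In practice this would wrap a call to DeepL, NLLB, or another MT engine.
Translator = Callable[[str, str], str]


def translate_cues(cues: list[Cue], translate: Translator, window: int = 1) -> list[Cue]:
    """Translate each cue's text, passing up to `window` neighboring cues on
    each side as context. Timecodes are copied unchanged."""
    out: list[Cue] = []
    for i, cue in enumerate(cues):
        lo, hi = max(0, i - window), min(len(cues), i + window + 1)
        context = " ".join(cues[k].text for k in range(lo, hi) if k != i)
        out.append(replace(cue, text=translate(cue.text, context)))
    return out


def fake_translate(text: str, context: str) -> str:
    # Stand-in for a real engine so the sketch runs offline.
    return text.upper()


cues = [Cue(0.0, 1.5, "so"), Cue(1.5, 3.0, "let's begin")]
for cue in translate_cues(cues, fake_translate):
    print(cue)
```

Because only the `text` field is replaced, the translated track keeps exactly the same timing as the source track, which also makes side-by-side proofreading straightforward.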

Challenge 4: Inconsistent output formats

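Some subtitle tools provide only basic text output and cannot export standard formats such as .srt, .vtt, or .ass, forcing users to convert formats by hand and slowing them down.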

Easysub's solution:

Easysub exports subtitle files in multiple formats and switches styles with one click, so subtitles work seamlessly across platforms.
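Converting between the two most common formats is largely mechanical. Below is a minimal SRT-to-WebVTT sketch, assuming plain SRT input (any formatting tags are passed through unchanged): it adds the `WEBVTT` header and switches the millisecond separator from `,` to `.`. The numeric SRT indices can stay, because WebVTT allows an optional cue identifier line before each timing line. `srt_to_vtt` is a hypothetical helper name.

```python
import re

# Matches SRT timing lines such as "00:00:01,500 --> 00:00:04,000".
_SRT_TIMING = re.compile(
    r"(\d{2}:\d{2}:\d{2}),(\d{3})\s*-->\s*(\d{2}:\d{2}:\d{2}),(\d{3})")


def srt_to_vtt(srt_text: str) -> str:
    """Hypothetical helper: convert SRT text to WebVTT.

    Adds the WEBVTT header and swaps the millisecond separator ',' for '.'.
    Numeric SRT indices are kept as (optional) WebVTT cue identifiers.
    """
    # Drop a UTF-8 byte-order mark and normalize Windows line endings.
    body = srt_text.lstrip("\ufeff").replace("\r\n", "\n").strip()
    body = _SRT_TIMING.sub(r"\1.\2 --> \3.\4", body)
    return "WEBVTT\n\n" + body + "\n"


srt = """1
00:00:01,500 --> 00:00:04,000
Hello everyone.

2
00:00:04,000 --> 00:00:06,250
Welcome back.
"""
print(srt_to_vtt(srt))
```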

Which Industries Benefit Most from AI Subtitle Tools?

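AI automatic subtitle tools are not just for YouTubers or video bloggers. As video content spreads and globalizes, more and more industries are adopting AI subtitles to work more efficiently, reach wider audiences, and look more professional.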

- Education and training (online courses / teaching videos / lecture recordings)

- Internal corporate communication and training (meeting records / internal training videos / project reports)

- Short videos for overseas audiences and cross-border e-commerce content (YouTube / TikTok / Instagram)

- Media and film production (documentaries / interviews / post-production)

- Online education platforms / SaaS tool developers (B2B content + product demo videos)

Why Easysub? How Does It Differ from Other Subtitle Tools?

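The market is full of subtitle tools, from YouTube's automatic captions to plugins for professional editing software to simple translation helpers. But many users find that: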

- Some tools have low recognition accuracy and break sentences in the wrong places.

- Some tools cannot export subtitle files, so the subtitles cannot be reused.

- Some tools produce poor translations that read awkwardly.

- Some tools have complex, unfriendly interfaces that ordinary users struggle with.

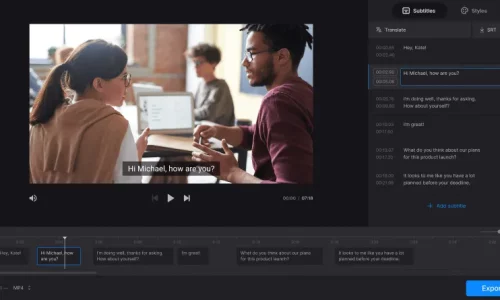

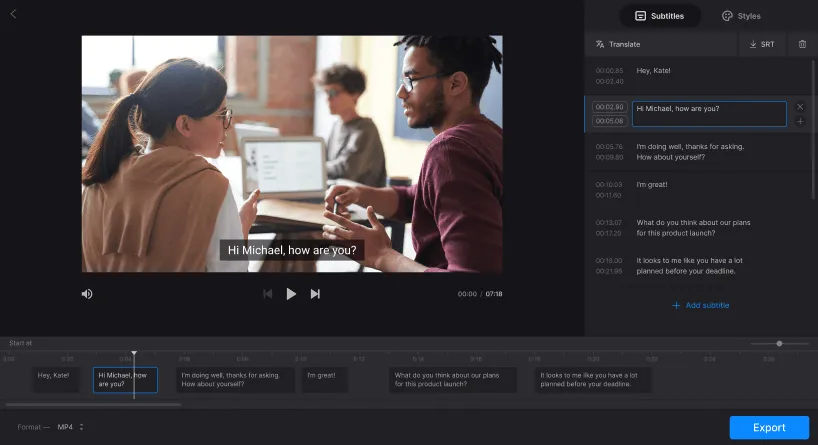

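As an experienced video creator, I have tested quite a few subtitle tools and ultimately chose, and now recommend, Easysub, because it delivers these four key advantages: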

- Accurately recognizes multilingual speech and adapts to different accents and contexts.

- Visual subtitle editing plus manual fine-tuning, for flexible control.

- Translation into 30+ languages, suited to overseas and multilingual users.

- A full range of output formats, compatible with all major platforms and editing tools.

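| Feature | Easysub | YouTube auto captions | Manual subtitle editing | Generic AI subtitle tools |
|---|---|---|---|---|
| Speech recognition accuracy | ✅ High (multilingual support) | Medium (best for English) | Depends on the editor's skill | Average |
| Translation support | ✅ Yes (30+ languages) | ❌ Not supported | ❌ Manual translation | ✅ Partial |
| Subtitle editing | ✅ Visual editor and fine-tuning | ❌ Not editable | ✅ Full control | ❌ Poor editing experience |
| Export formats | ✅ srt / vtt / ass | ❌ No export | ✅ Flexible | ❌ Limited formats |
| User-friendly interface | ✅ Simple, multilingual UI | ✅ Very basic | ❌ Complex workflow | ❌ Often English-only |
| Chinese-content friendly | ✅ Highly optimized for Chinese | ⚠️ Needs improvement | ✅ Labor-intensive | ⚠️ Unnatural translations |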

Start Using Easysub Today to Enhance Your Videos

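In an era of globalized content and explosive short-video growth, automatic subtitles have become a key tool for improving a video's visibility, accessibility, and professionalism.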

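With an AI subtitle generation platform like Easysub, content creators and businesses can produce high-quality, multilingual, accurately synchronized subtitles in less time, significantly improving the viewing experience and distribution efficiency.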

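Whether you are a beginner or an experienced creator, Easysub can speed up and enhance your content creation. Try Easysub free today and experience efficient, intelligent AI subtitles that help every video cross language barriers and reach a global audience!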

Let AI power up your content in just a few minutes!

👉 Try it free here: easyssub.com

Thank you for reading this blog. If you have further questions or custom needs, feel free to contact us!